Scale Invariant Feature Transform (SIFT) [1] is the local feature detection we used in this project. It discovers SIFT keys from input images that describe features in the input images. These SIFT keys are invariant (to a degree) to affine transformations. Given a feature, its SIFT keys can be generated and used to search for the feature in other images. SIFT keys are robust enough to detect a feature even if the 3D object generating this feature is rotated by 20 degrees.

Parametric Feature Detection [2] is the global feature detection algorithm we used in this project. Each feature to be detected is parameterized by a set of values. Given a set of images generated by systematically varying these parameters, a PCA based model of these input images can be learned. By projecting each input image into the lower dimensional space using the PCA model one obtains a set of points. These points define a manifold. Given a new input image, one can project it into the lower dimensional space and determine its distance from this manifold. In the case that the distance is small enough, the nearest points on the manifold can be used to calculated the parameters of the feature that generate the image.

Our implementation of the SIFT feature detection algorithm was very similar to the one proposed in Lowe1999. One difference was that we did not use the best bin first algorithm, since the training database was relatively small. Applying SIFT feature detection to this problem was also quite straightforward. SIFT keys for the 32 training images we created and their descriptors were stored in a database. The keys were extracted for the test images. We find all consistent affine transforms. The transformation with the most consistent keys is selected as the representation transformation. The tranformation has with it an associated object. We return this object as the matching object. The algorithm runs in under a second per frame including time to load the image and database. Scale Invariant Feature Transform (SIFT) is a method for image feature generation. These features, or keys, are the extrema in scale space of the difference-of-Gaussian (DOG) function. This space is created by successively blurring the image, and taking the difference between adjacent blurred images. This makes key extraction particularly fast. After keys are extracted, a description for each key is made using the local image region around the key. In order to make this robust to changes in rotation, a dominant orientation and magnitude must be obtained. A histogram for the gradients of the local region is created. The dominant direction is the bin with the greatest magnitude. Once this is done, the key descriptor is built. This descriptor is inspired by biological processes. Again, histograms are created of the local region. However, this time, the entire histogram is saved. The resulting descriptor is a small vector, which in our case contains 128 elements. In order to do object recognition using SIFT, one must find matching keys. Keys from a test image are matched using the Euclidean distance to keys from an image containing the object to be recognized. Keys that have consistent interpretations, that is, they have to same transforms to training images coordinates, are assumed to come from the same object. The best affine transform from test images coordinates to training images coordinates is found using a least squares method.

Before processing the training images with Eigenfeatures, two steps were performed to pre-process the images. First, the markings on the dog were matted out using a color segmented version of the training image. The segmented image colors various regions of the image as belonging to dog markings, playing field, field markings and background. We did not develop the algorithm to do this. It was developed by the UW Huskies RoboCup team by Griff Hazen. It works by clustering pixel colors based on previously labeled images. The next step was to normalize the matted image. This was meant to help compensate for varying lighting conditions. Once the training images were pre-processed, PCA was used to reduce the dimensionality of the images. For testing purposes we projected the training images into eigenspaces of dimesions 5, 10 and 15.

The pictures below illustrate how the two methods processed examples images from the training set. The average Eigenfeature and the top 15 Eigenfeatures can be viewed here.

Depicted below are four sets of images that illustrate the Eigenfeature algorithm working with the test images. In each set is the source AIBO CCD Image followed by the color segmented image and the matted body markings. Since performance of the Eigenfeatures algorithm is linked to the number of eigenvectors used, we tested the Eigenfeature approach using 5, 10 and 15 eigenvectors. For each case we show the images from the training set that were deemed to be closest to the test image in the lower dimensional eigenspace. The recovered angle is calculated by looking at this closest match and its two neighboring training images (i.e. one notch before and after on the turntable). An interpolated angle is generated by finding the minimum of a parabola fit to the euclidean distance, in the eigenspace, of the test image to these three images.

The distance to the robot is recovered by examining the ratio of the y dimension of the bounding box in the color segmented image to the y dimension of the bounding boxes used in the training images. The distance to the dog is then the distance to the dog in the training images divided by this ratio. This is because the height of the dog relative to the ground plane (which is parallel to the camera plane) does not change and the images undergo a perspective projection (x and y coordinates are divided by the z coordinate).

AIBO CCD Image |

Color Segmented Image |

Matted AIBO CCD Image |

5 Eigen Features Match | Recovered Angle 160.9 | Recovered Distance 45 cm |

10 Eigen Features Match | Recovered Angle 162.3 | Recovered Distance 45 cm |

15 Eigen Features Match | Recovered Angle 162.2 | Recovered Distance 45 cm |

AIBO CCD Image |

Color Segmented Image |

Matted AIBO CCD Image |

5 Eigen Features Match | Recovered Angle 42.2 | Recovered Distance 30 cm |

10 Eigen Features Match | Recovered Angle 99.2 | Recovered Distance 30 cm |

15 Eigen Features Match | Recovered Angle 36.7 | Recovered Distance 30 cm |

AIBO CCD Image |

Color Segmented Image |

Matted AIBO CCD Image |

5 Eigen Features Match | Recovered Angle 136.9 | Recovered Distance 83 cm |

10 Eigen Features Match | Recovered Angle 351.5 | Recovered Distance 83 cm |

15 Eigen Features Match | Recovered Angle 350.7 | Recovered Distance 83 cm |

AIBO CCD Image |

Color Segmented Image |

Matted AIBO CCD Image |

5 Eigen Features Match | Recovered Angle 325.8 | Recovered Distance 98 cm |

10 Eigen Features Match | Recovered Angle 325.8 | Recovered Distance 98 cm |

15 Eigen Features Match | Recovered Angle 323.4 | Recovered Distance 98 cm |

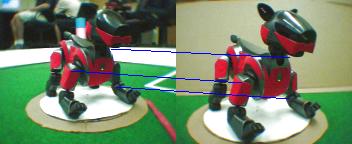

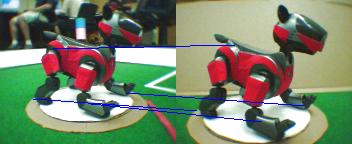

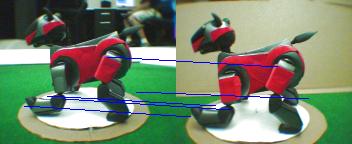

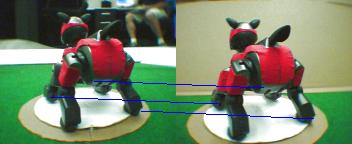

This second set of images shows four examples of AIBO CCD Images and

the image that the SIFT algorithm deemed the best match from within

the training sequence. The blue lines show the locations of SIFT keys

in the test image and their matched keys in the training image.

Unlike the Eigenfeatures approach, SIFT cannot interpolate the angle,

so the resolution of the recovered angle is linked to the number of

training images captured. By decomposing the linear tranformation

solved by the SIFT algorithm into a scale and a rotation, we recover

the scaling of the SIFT keys in the y direction. This scale is used to

recover the estimated distance to the dog by dividing it into the

distance that the training images were captured.

AIBO CCD Image |

Recovered Angle 326.25 | Recovered Distance 41.9356 cm |

AIBO CCD Image |

Recovered Angle 303.75 | Recovered Distance 33.0557 cm |

AIBO CCD Image |

Recovered Angle 90 | Recovered Distance 46.9404 cm |

AIBO CCD Image |

Recovered Angle 157.5 | Recovered Distance 43.596 cm |

Tabulated below are the accuracy results from the experiment. For

each method and each distance we show the number of test images used

and the number of images were the orientation was correctly

recovered. We also show the average recovered distance. As you can

see, when 15 eigenvectors were used, the Eigenfeatures approach

idenfied the pose correctly in all the accurately segmented images.

Unfortunately the SIFT algorithm performed poorly. Once the dog was

situated 75 cm or further away the SIFT algorithm was unable to match

any of the test images.

|

|

||||||||

| Distance |

Number Test

Images |

# Correct Angles

(5 Eigen Features) |

# Correct Angles

(10 Eigen Features) |

# Correct Angles

(15 Eigen Features) |

Average Recovered Distance

(Eigen Features) |

Number Test

Images |

# Correct Angles

(SIFT Features) |

Average Recovered Distance

(SIFT Features) |

| 45 cm | 9 | 5 | 5 | 5 | 45 cm | 9 | 8 | 41.8 cm |

| 35 cm | 5 | 5 | 4 | 5 | 31.1 cm | 5 | 2 | 69.5 cm |

| 75 cm | 5 | 4 | 5 | 5 | 76.7 cm | 5 | 0 | N/A |

| 100 cm | 4 | 3 | 4 | 4 | 103.7 cm | 4 | 0 | N/A |

The SIFT results were somewhat disappointing. We believe that the poor performance was linked to the low resolution of the camera. In preliminary tests using a standard digital camera we were able to recover dog poses at a much higher rate at all scales. Many more keys were found in these early tests. The average number of keys found using the AIBO CCD is 50. In the cases where the test image was recognized, the average number of keys matched was about 4 or 5. The SIFT feature detector is designed to incorporate the data of many keys. This would mean either highly textured images, or high resolution images. In or case, we have neither. This is not a good domain for SIFT feature detection.

Even though the AIBO dog in the test images was missing a leg marking, the Eigenfeatures approach was able to accurately recover pose information. Regardless, there are limitations to the algorithm. To begin with, without a proper color segmented image the algorithm breaks down. When there was a red measuring tape present in the image the algorithm failed to recover pose information correctly.

Future work that could be explored with the Eigenfeatures approach would be to experiment with other degrees of freedom in the dog's pose. Example degrees of freedom would be the head position and the dog is a lying down position. While playing a soccer match the AIBO dogs often violently swing their heads back and forth to kick and locate the ball. Additionally, it would be interesting to deploy the alogrithm on the AIBO dogs themselves. Right now all tests were conducted while both dogs were standing still. We could determine the robustness of the algorithm by allowing one or both of the dogs to move while detection take place.